As AI transforms industries, businesses are under increasing pressure to stay ahead of regulatory challenges. One major shift occurred this summer: on August 1, 2024, the EU AI Act entered into force and became “the world’s first-ever horizontal regulatory framework for AI,” setting new rules for how AI systems can be developed, deployed and managed. For organizations that rely on or will rely on AI, understanding and adhering to these regulations is critical—but not without its complexities.

Our exclusive webinar with Atrak Yadegari from Deloitte and Guido Bilstein from Collibra explored the key requirements of the EU AI Act and fast-track strategies to accelerate your compliance readiness.

This post gives a concise summary of the key talking points from the webinar, along with a few selected excerpts for deeper insight.

The AI Act at a glance

The EU AI Act aims to ensure that AI systems are safe, transparent and human-centric while fostering innovation.

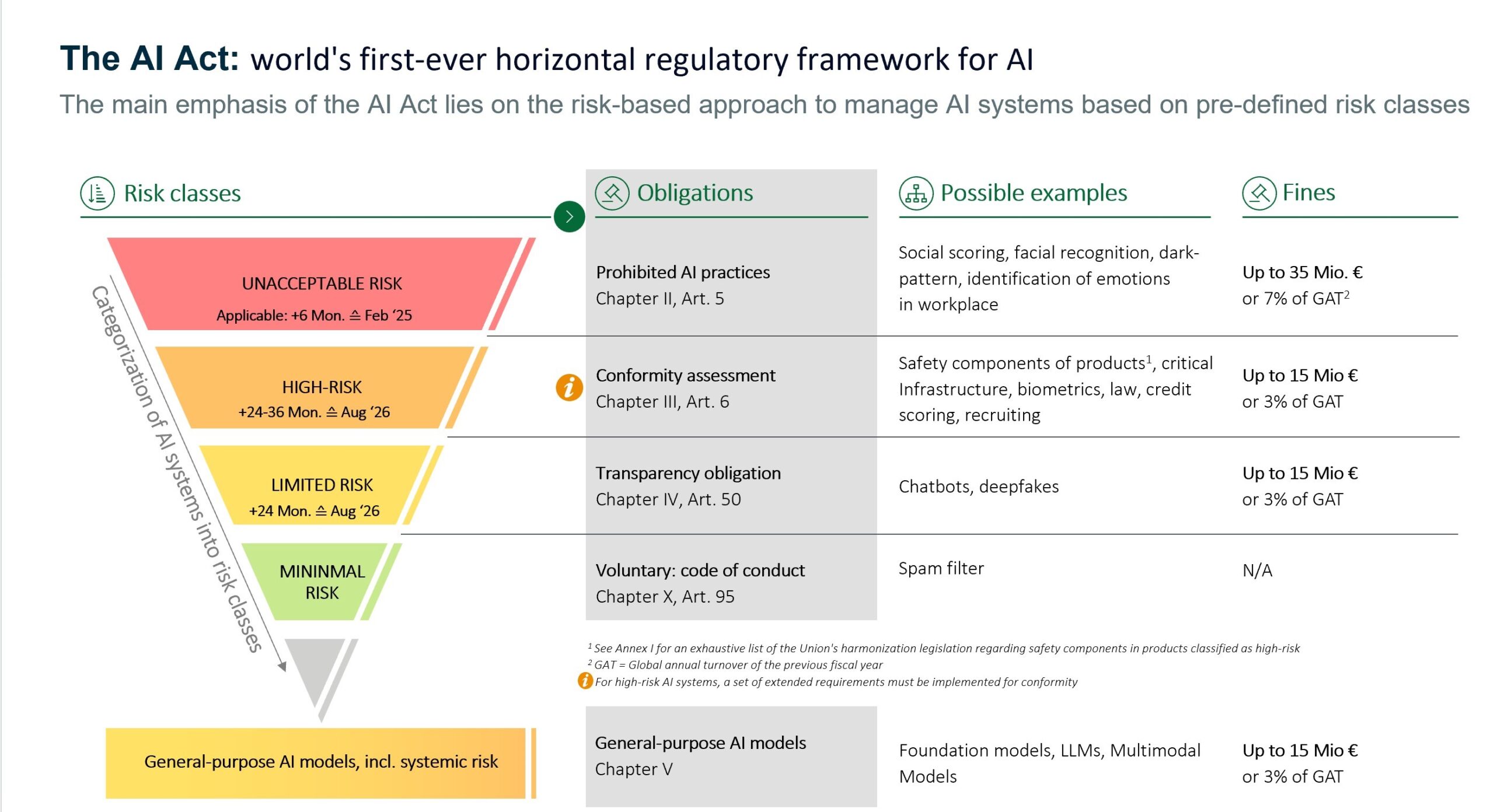

The Act categorizes AI systems into four risk tiers—unacceptable, high, limited and minimal risk—and imposes different obligations based on the associated risks of each category. Systems classified as high risk, such as biometric identification tools or AI used in employment decisions, face the most stringent compliance measures. In contrast, minimal risk systems, like spam filters, are largely unaffected.
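As a rough illustration of how the four tiers could drive a first-pass triage of an AI inventory, here is a minimal Python sketch. The names `RiskTier`, `EXAMPLE_TIERS`, `OBLIGATIONS` and `triage` are hypothetical and come from neither the Act nor any Collibra API. The tier assignments simply mirror the examples in the paragraph above, and the one-line obligation summaries are simplifications. A real classification needs legal review.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk tiers named in the text."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical inventory labels; the assignments mirror the examples in the text.
EXAMPLE_TIERS = {
    "biometric identification": RiskTier.HIGH,
    "employment decisions": RiskTier.HIGH,
    "spam filter": RiskTier.MINIMAL,
}

# Simplified one-line summaries, for illustration only.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: "prohibited",
    RiskTier.HIGH: "most stringent compliance measures",
    RiskTier.LIMITED: "transparency obligations",
    RiskTier.MINIMAL: "largely unaffected",
}


def triage(use_case: str) -> str:
    """Return a short triage note for a use case, or flag it for review."""
    tier = EXAMPLE_TIERS.get(use_case)
    if tier is None:
        # Anything not yet mapped is escalated rather than guessed at.
        return f"{use_case}: unclassified, needs review"
    return f"{use_case}: {tier.value} risk, {OBLIGATIONS[tier]}"


if __name__ == "__main__":
    for name in ("spam filter", "employment decisions", "recommendation engine"):
        print(triage(name))
```

Unmapped use cases are deliberately flagged for review instead of defaulting to a tier, since a wrong default would hide exactly the systems that need attention.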

Businesses developing or using general-purpose AI models face additional obligations of their own. These models must comply with rules for data governance, technical documentation and risk management throughout their lifecycle. Failing to meet the Act’s standards could result in severe financial penalties, with fines of up to €35 million or 7% of global annual turnover for the most serious offenses.

With the Act now in force, the question isn’t whether you should comply—it’s how you’ll do it.

Organizations must quickly assess their AI systems, understand the risks involved, and prepare for compliance.

The penalties for non-compliance

The cost of non-compliance under the EU AI Act is too high to ignore. The most egregious violations can draw fines of up to €35 million or 7% of global annual turnover. High-risk AI systems that don’t adhere to compliance measures can see fines of up to €15 million or 3% of global turnover, and supplying incorrect, incomplete or misleading information to authorities can lead to fines of up to €7.5 million or 1% of global turnover.
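To make the arithmetic concrete, the sketch below estimates the fine ceiling for the two tiers with explicit figures above. It assumes that the higher of the fixed cap and the turnover share applies, which is how the Act words the ceiling for larger undertakings but is not stated in this post. Smaller companies are treated differently. The tier labels and the function name `fine_ceiling` are hypothetical.

```python
# Fixed caps (EUR) and turnover percentages quoted in the text above.
# The tier names are hypothetical labels chosen for this sketch.
PENALTY_TIERS = {
    "most_serious": (35_000_000, 7),
    "high_risk_non_compliance": (15_000_000, 3),
}


def fine_ceiling(tier: str, global_turnover_eur: int) -> int:
    """Return the maximum possible fine in EUR for a tier.

    Assumption: the higher of the fixed cap and the turnover share applies.
    """
    fixed_cap, percent = PENALTY_TIERS[tier]
    # Integer arithmetic keeps the result in whole euros, rounded down.
    turnover_share = global_turnover_eur * percent // 100
    return max(fixed_cap, turnover_share)


if __name__ == "__main__":
    print(fine_ceiling("most_serious", 1_000_000_000))  # 70000000
    print(fine_ceiling("most_serious", 200_000_000))    # 35000000
```

For a company with €1 billion in turnover, the 7% share (€70 million) exceeds the fixed cap. At €200 million in turnover, the €35 million cap is the larger figure.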

Beyond penalties: Understanding operational and reputational risks

Penalties are crucial for enforcing compliance, but companies should view them as more than deterrents. They exist to encourage healthy, proactive governance practices that go beyond merely avoiding fines.

AI necessitates a dynamic approach to oversight that goes beyond a purely regulatory interpretation of governance issues.

Operational risks, such as degraded performance of an AI use case in production or the failure of a chatbot that isn’t fed with updated data, can impact a company’s effectiveness and brand image. This holds even where the regulatory obligations are less stringent, as they are for applications like chatbots, which generally fall into the lower risk categories.

By adopting a forward-thinking approach, businesses can enhance their reputation, build trust and create lasting value. Governance leaders should see the Act as both a legal requirement and an opportunity to strengthen ethical data and AI governance practices in their systems.

Why a unified platform for AI and data governance is key

One of the most compelling aspects of using Collibra is that it allows organizations to manage data and AI governance within a single platform. This integrated approach reduces complexity, eliminating the need to juggle multiple tools and systems to meet the various requirements of the EU AI Act or other data-driven regulations.

Having a single source of trust for data and AI governance simplifies compliance. Organizations can track their AI systems from development to deployment, monitor data quality, enforce access controls, and ensure that all necessary documentation is up-to-date—all within the same platform. This holistic view makes it easier to demonstrate compliance during audits and reduces the administrative burden on teams.

How Collibra helps accelerate AI Act readiness

Navigating the requirements of the EU AI Act can seem daunting. Between cataloging AI use cases, managing data risks, and ensuring ongoing monitoring and transparency, the administrative burden is significant. However, a data and AI governance platform like Collibra lets organizations streamline their data and AI compliance processes together and reduce the manual effort involved.

As shown in our demo, here’s how Collibra’s AI governance capabilities can fast-track your compliance readiness.

1. Catalog AI use cases and validate data

The EU AI Act mandates strict transparency for all high-risk AI systems, including detailed documentation of how AI models are developed and used. With Collibra’s pre-built model cards, organizations can easily catalog their AI use cases, ensuring that each project is properly documented and ready for compliance assessments.

More importantly, Collibra enables continuous validation of the data underlying AI models, ensuring that it remains accurate, reliable and compliant over time. This continuous monitoring is crucial, particularly for high-risk AI systems, where organizations must demonstrate compliance not just at deployment but throughout the entire lifecycle of the AI model.

2. Streamline compliance workflow across teams

Meeting the EU AI Act’s requirements isn’t just the responsibility of compliance officers or privacy managers. It requires teamwork across multiple departments, including IT, legal and operations. Collibra facilitates this by automating workflows and providing a centralized platform for collaboration. Privacy and governance managers, in particular, should get involved in the AI lifecycle as early as possible so that they understand its dynamics. This end-to-end transparency ensures that all stakeholders can collaborate effectively, aligning on compliance goals and reducing the risk of miscommunication or gaps in governance, no matter the underlying technology.

3. Mitigate data risk and protect sensitive information

Under the EU AI Act, organizations must implement robust data governance practices, particularly when dealing with sensitive or personal data. Collibra’s platform automatically discovers and classifies sensitive data across your organization’s AI systems, allowing you to manage access controls and reduce the risk of unauthorized use.

What’s next?

The EU AI Act is already in force, and organizations must take immediate steps to ensure they’re prepared. The key to success lies in proactive preparation.

Mapping out your AI systems, assessing risk levels and implementing the necessary governance frameworks will help you stay ahead of the compliance curve. Collibra’s unified platform provides the tools to manage AI governance seamlessly, giving organizations the confidence to navigate this new regulatory landscape. Deloitte can assist you with transforming your organization and processes, as well as managing the integration with other risk governance functions.

***

Interested in learning more about the EU AI Act? Listen to the on-demand webinar recording.

Need more insights? Read how to be an AI governance champion or check out Deloitte’s blog on the EU AI Act.