The holy grail for data scientists? AI governance

I’ve been working at Collibra for almost four years now, which has given me a thorough grounding in data governance. As a Data Scientist, I build predictive models with my colleagues, and while doing so I came to the conclusion that something was missing: we lacked transparency on what data was being used, how it was being used, and which features finally made it into the model. Transparency is crucial when building successful data products, and step 6 of the 8-step process to create a data product asks you to document your data product. That is why I started developing AI governance within our Collibra platform.

Benefits of AI governance

AI governance offers several advantages to organizations that use artificial intelligence in their operations. One key benefit is that it removes the “black box” around AI models, so stakeholders can better understand how the models work and make informed decisions about their use. AI governance also helps Data Scientists catalog their models, making it easy to track which models are used for which tasks and how they are performing. By implementing governance processes, organizations can also improve their ability to find, understand, and trust the data used to train their models, leading to more accurate and reliable results. Finally, with increasing regulation and scrutiny of AI, a robust governance framework helps organizations demonstrate their compliance and their commitment to the responsible use of AI.

Upcoming AI regulations

Upcoming AI regulations in the US and Europe make AI governance even more critical for organizations that use artificial intelligence. To protect individuals from harmful or unethical AI practices, proposed regulations would ban certain techniques. For example, the European Union’s proposed AI Act would prohibit subliminal techniques that could physically or psychologically harm individuals, the exploitation of vulnerabilities of specific groups, and the use of AI to evaluate people’s trustworthiness based on their behavior or personal characteristics. It would also limit the use of real-time remote biometric identification systems for law enforcement in publicly accessible spaces to specific circumstances, subject to strict safeguards and prior authorization by a judicial or independent administrative authority. Documenting how each model is used, and verifying that it does not fall under these prohibitions, helps ensure that AI models are applied appropriately and ethically. These regulations reflect a growing concern for the ethical use of AI and prioritize protecting individuals from harmful practices.

AI governance in action

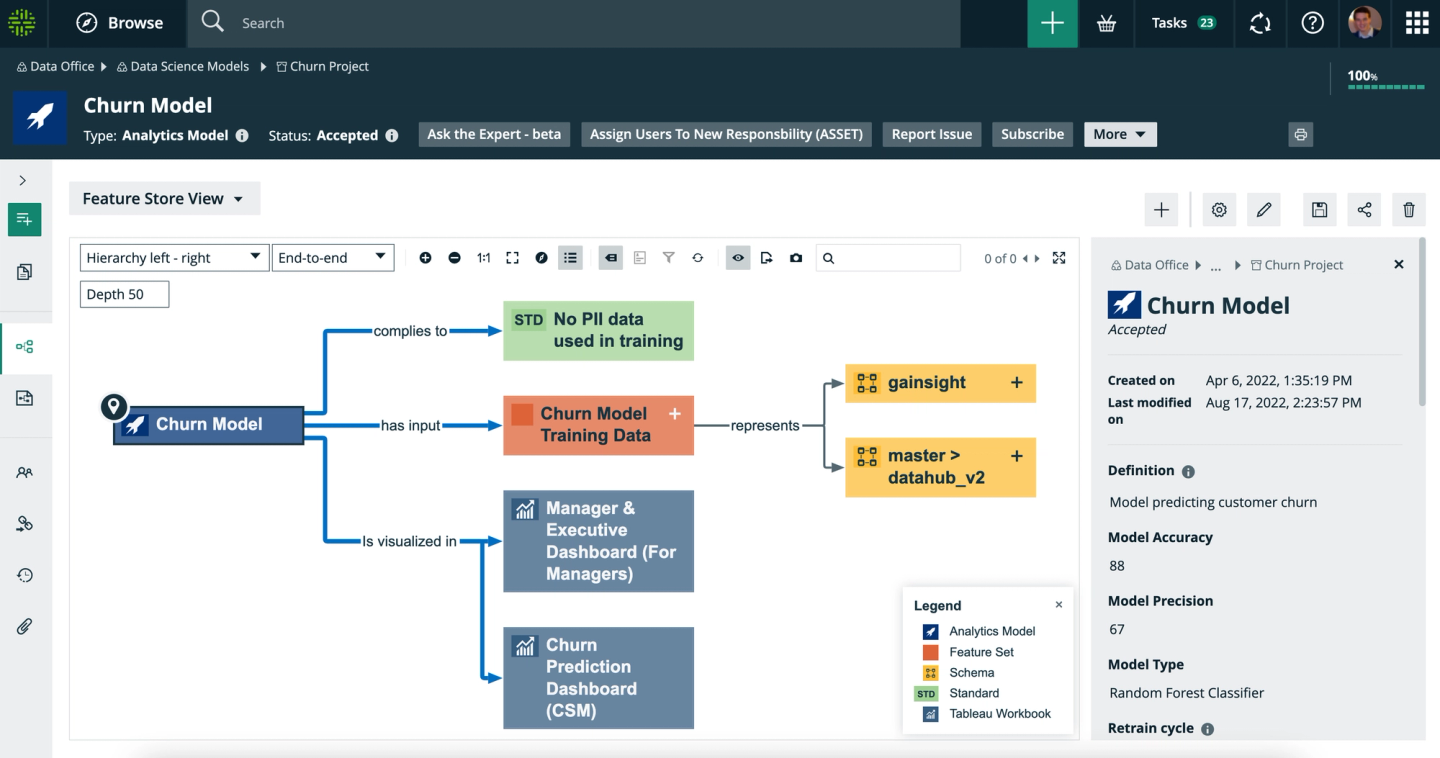

Let’s take a closer look at AI governance applied to a concrete use case: churn prediction. In the diagram, you can grasp the ins and outs of the model at a glance. Starting on the right, the schemas (yellow) form the basis for the feature set “Churn Model Training Data” (red), which is fed into the Churn model (blue).

The Churn model’s results feed two dashboards: the “Manager & Executive Dashboard”, which gives managers and executives a global view of the situation, and the “Churn Prediction Dashboard”, which is tailored to each Customer Success Manager (CSM).
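To make that lineage concrete, here is a minimal Python sketch that models the diagram as a directed graph and walks it in either direction. It is not Collibra’s API or data model; the `UPSTREAM` mapping and the `walk` and `invert` helpers are hypothetical, and `Schema A` / `Schema B` are placeholder names because the actual schemas are not named here.

```python
from collections import deque

# Hypothetical lineage for the churn example: each asset maps to the assets
# it is built from directly. "Schema A" and "Schema B" are placeholder names.
UPSTREAM: dict[str, list[str]] = {
    "Churn Prediction Dashboard": ["Churn Model"],
    "Manager & Executive Dashboard": ["Churn Model"],
    "Churn Model": ["Churn Model Training Data"],
    "Churn Model Training Data": ["Schema A", "Schema B"],
    "Schema A": [],
    "Schema B": [],
}


def invert(edges: dict[str, list[str]]) -> dict[str, list[str]]:
    """Flip 'built from' edges into 'consumed by' edges."""
    flipped: dict[str, list[str]] = {}
    for consumer, sources in edges.items():
        for source in sources:
            flipped.setdefault(source, []).append(consumer)
    return flipped


def walk(start: str, edges: dict[str, list[str]]) -> list[str]:
    """Breadth-first walk from `start`; returns every reachable asset, nearest first."""
    seen: set[str] = set()
    order: list[str] = []
    queue = deque(edges.get(start, []))
    while queue:
        asset = queue.popleft()
        if asset in seen:
            continue  # an asset can be reachable through more than one path
        seen.add(asset)
        order.append(asset)
        queue.extend(edges.get(asset, []))
    return order


# What does the CSM dashboard ultimately depend on?
print(walk("Churn Prediction Dashboard", UPSTREAM))
# ['Churn Model', 'Churn Model Training Data', 'Schema A', 'Schema B']

# Which assets are affected if "Schema A" changes?
print(walk("Schema A", invert(UPSTREAM)))
# ['Churn Model Training Data', 'Churn Model', 'Churn Prediction Dashboard', 'Manager & Executive Dashboard']
```

Walking upstream from a dashboard answers “what data feeds this?”, while walking the inverted graph from a schema answers “what is affected if this changes?”. Both questions come from the same stored edges.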

Note that our CSMs were key stakeholders when building the model; through the diagram view, they can clearly understand how the model works and which features it uses. Policies can also be attached to the model: usage, retention, versioning, auditing, compliance, and deployment policies. We have also included a privacy standard (green) to show that the Churn model is trained not on PII but on aggregated data. This tells us the contexts in which the model can be applied.
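One way to think about the privacy standard is as a lineage check: a model complies if none of the assets it is trained on are classified as PII. The Python sketch below illustrates that idea under stated assumptions. The `ASSETS` catalog, its tags, the schema names, and the `pii_sources` and `missing_policies` helpers are all hypothetical and do not reflect how Collibra stores or evaluates standards.

```python
from collections import deque

# Hypothetical catalog: each asset lists its direct upstream sources and its
# classification tags. Schema names and tags are illustrative assumptions.
ASSETS: dict[str, dict] = {
    "Churn Model": {"upstream": ["Churn Model Training Data"], "tags": set()},
    "Churn Model Training Data": {"upstream": ["Aggregated Usage Schema"], "tags": {"aggregated"}},
    "Aggregated Usage Schema": {"upstream": [], "tags": {"aggregated"}},
    # Present in the catalog but not part of the model's lineage.
    "Customer Contacts Schema": {"upstream": [], "tags": {"PII"}},
}

# The policy types named in the text.
POLICY_TYPES = {"usage", "retention", "versioning", "auditing", "compliance", "deployment"}


def pii_sources(model: str, assets: dict[str, dict]) -> list[str]:
    """Return upstream assets of `model` tagged PII; an empty list means the check passes."""
    found: list[str] = []
    seen: set[str] = set()
    queue = deque(assets[model]["upstream"])
    while queue:
        name = queue.popleft()
        if name in seen:
            continue
        seen.add(name)
        if "PII" in assets[name]["tags"]:
            found.append(name)
        queue.extend(assets[name]["upstream"])
    return found


def missing_policies(attached: set[str]) -> list[str]:
    """Return the policy types not yet attached to a model, alphabetically."""
    return sorted(POLICY_TYPES - attached)


print(pii_sources("Churn Model", ASSETS))  # []
print(missing_policies({"usage", "retention", "versioning"}))
# ['auditing', 'compliance', 'deployment']
```

Because the check follows lineage rather than inspecting the model itself, wiring a PII-tagged schema into the training data would make it fail without any change to the model record.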

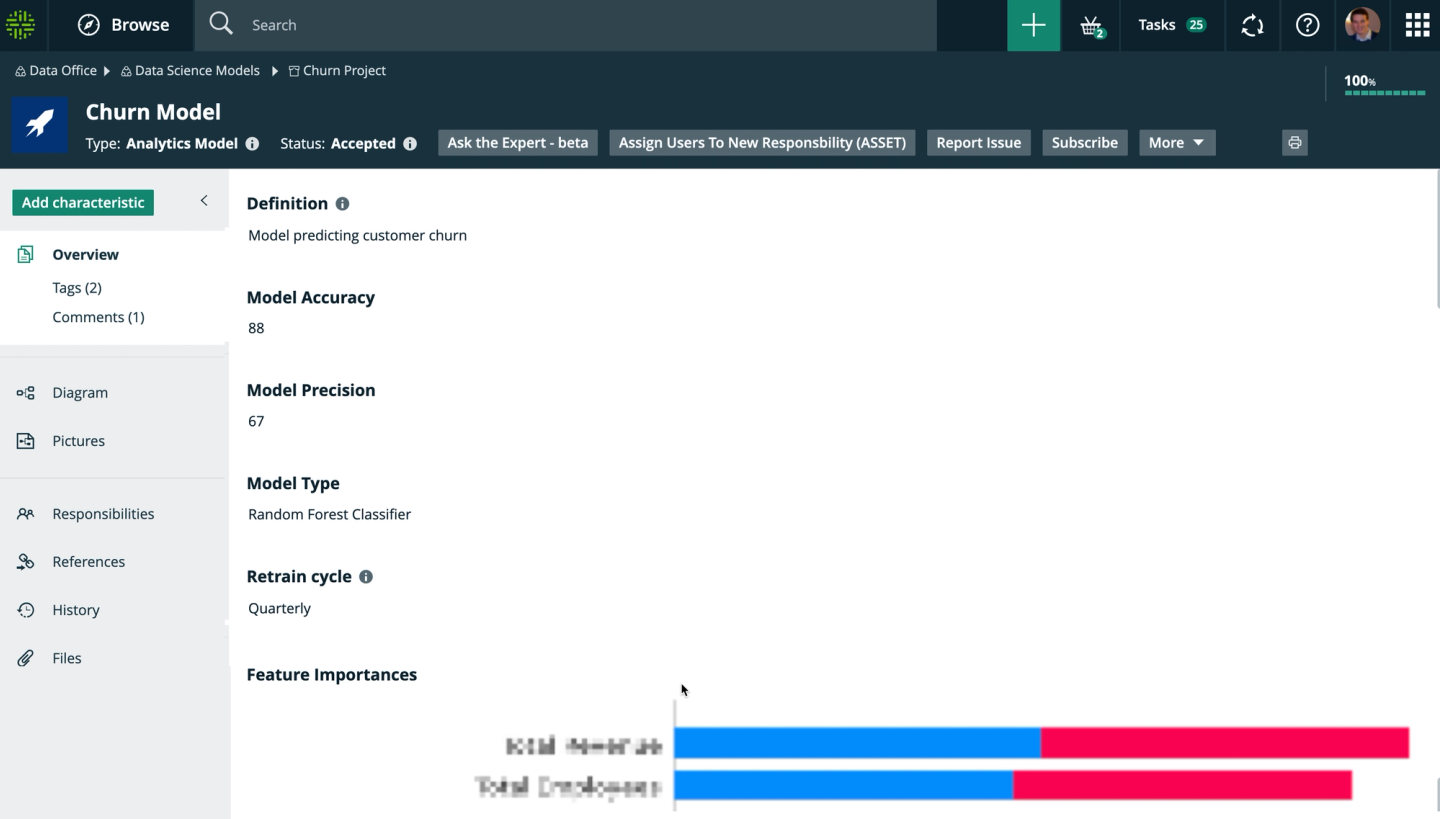

Each component carries additional information; here I’ll zoom in on the “Churn Model” asset. Several attributes, such as model accuracy, precision, type, and retrain cycle, are added to the asset for the benefit of Data Scientists. This provides a central place for documenting models and makes it easy to compare multiple models as they are released in the coming months, reducing confusion. We are also adding information on feature importances (the feature names are hidden here on purpose), which helps communication between the Data Product Owner and the Data Scientist.
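As an illustration of what such a model asset might hold, here is a minimal Python sketch using a dataclass. The field names, the `compare` helper, and all metric values and feature names are hypothetical placeholders (the real features are deliberately hidden in the screenshot); this is neither the Churn model’s actual documentation nor a Collibra API.

```python
from dataclasses import dataclass, field


@dataclass
class ModelAsset:
    """Hypothetical record of the attributes documented on a model asset."""
    name: str
    model_type: str
    accuracy: float
    precision: float
    retrain_cycle_days: int
    feature_importances: dict[str, float] = field(default_factory=dict)

    def top_features(self, n: int = 3) -> list[str]:
        """Return the n most important features, highest importance first."""
        ranked = sorted(self.feature_importances.items(), key=lambda kv: kv[1], reverse=True)
        return [name for name, _ in ranked[:n]]


def compare(models: list[ModelAsset], metric: str) -> list[str]:
    """Rank documented models by a numeric attribute such as 'accuracy' or 'precision'."""
    ranked = sorted(models, key=lambda m: getattr(m, metric), reverse=True)
    return [m.name for m in ranked]


# Placeholder values for illustration only; these are not real results.
v1 = ModelAsset("Churn Model v1", "classifier", accuracy=0.80, precision=0.70,
                retrain_cycle_days=30,
                feature_importances={"feature_a": 0.2, "feature_b": 0.5, "feature_c": 0.3})
v2 = ModelAsset("Churn Model v2", "classifier", accuracy=0.85, precision=0.65,
                retrain_cycle_days=30)

print(compare([v1, v2], "accuracy"))   # ['Churn Model v2', 'Churn Model v1']
print(compare([v1, v2], "precision"))  # ['Churn Model v1', 'Churn Model v2']
print(v1.top_features(2))              # ['feature_b', 'feature_c']
```

Storing metrics as typed fields rather than free text is what makes a side-by-side comparison straightforward once several model versions exist.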

Why we need a well-documented model

The importance of well-documented models cannot be overstated. Last year, while my colleague was on vacation, a model I had not worked on malfunctioned, and I had to step in. Thanks to the thorough documentation in Collibra, I quickly understood the model’s inputs and outputs and how everything ran, which let me resolve the issue swiftly and get back to my own tasks.

Conclusion: The future of AI governance

The future of AI governance looks promising, with advances in technology such as GPT-3 and its ability to translate complex concepts like SHAP values (a feature importance indicator) into natural language, as I demonstrated in my blog. This will make it easier for people to understand, communicate about, and use technology in their daily lives. The development of shoppable feature sets for data scientists will also increase efficiency and productivity in their work. Another critical aspect of the future is the use of Collibra Trusted Business Reporting for models, which will help ensure the accuracy and reliability of AI-generated predictions and decisions. Overall, the future of AI is exciting and holds great potential for improving many industries and aspects of our lives.
