AI governance versus model management: What’s the difference?

The world of artificial intelligence (AI) is so chock-full of buzzwords and nomenclature that it’s hard to keep track of what’s really being said. That causes a lot of confusion for those who aren’t steeped in this world on a regular basis, and even those who are often disagree on terms or phrases. Case in point: AI governance and AI model management. Both seem pretty straightforward, but there’s a great deal of confusion between the two, so it’s time to set the record straight.

Why do people get these terms mixed up?

A major factor in the confusion lies in not understanding the three main approaches to AI governance. The first approach, and the one that isn’t a source of misunderstanding, is the compliance-centric approach. Led by Legal and Risk teams, it aims to ensure AI complies with laws and regulations by fully documenting and auditing the use of AI. While certainly important, this approach only scratches the surface of an AI use case: compliance is only one dimension of an AI project.

The next approach, the most technical of the three, is the model-centric approach. This flavor of AI governance helps AI and data teams implement AI use cases by preparing, developing, running and monitoring AI models. Data scientists build AI models and work in MLOps platforms, where they implement best practices to manage those models.

The third approach is the data-centric approach. This takes the best components of the compliance-centric and model-centric approaches, helping data, AI, legal and risk teams deliver trusted AI by providing easy access to reliable data and implementing appropriate controls across the AI use-case lifecycle. You’ll notice in the first two approaches, major groups/teams are left out of each, creating an incomplete approach to AI governance. With the data-centric approach, teams from across the organization are gathered to bring their expertise and perspective to AI use cases and projects, including:

- Expertise from data teams to provide trusted, high-quality data

- Expertise from AI/Data Science teams to build and manage AI models

- Expertise from Legal and Risk as well as Security to understand compliance requirements

- Expertise from critical business stakeholders to understand, approve, and implement the use case

In the past, when organizations thought about AI, these perspectives came exclusively from the Data Science team, whose work, as we saw in the model-centric approach, revolves around… AI models! So when Data Science teams think about AI governance, they think model management. It’s also important to understand that the AI model is not the entire AI use case, but only a single component of it. An AI use case consists of:

- Business context (including the business impact/ROI)

- Legal/compliance/ethical information

- Data that feeds the model

- The AI model

- Impact of model decisions

- Roles/responsibilities/accountability of owners
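
To make the point that the model is only one of six components, the list above can be sketched as a simple record. This is an illustrative sketch only: the class name `AIUseCase`, its field names, and the `missing_components` helper are hypothetical and do not represent Collibra's data model or any product API.

```python
from dataclasses import dataclass, field


@dataclass
class AIUseCase:
    """Hypothetical record of one AI use case (all names are illustrative)."""

    name: str
    business_context: str = ""   # business impact / ROI
    compliance_notes: str = ""   # legal, compliance, and ethical information
    data_sources: list[str] = field(default_factory=list)  # data that feeds the model
    model_name: str = ""         # the AI model itself
    decision_impact: str = ""    # impact of model decisions
    owners: dict[str, str] = field(default_factory=dict)   # role -> accountable owner

    def missing_components(self) -> list[str]:
        """Return the components from the list above that are still empty."""
        checks = {
            "business context": self.business_context,
            "legal/compliance/ethical information": self.compliance_notes,
            "data that feeds the model": self.data_sources,
            "the AI model": self.model_name,
            "impact of model decisions": self.decision_impact,
            "roles and accountability": self.owners,
        }
        return [label for label, value in checks.items() if not value]


# A use case documented only from the model-centric point of view:
# the model is known, but the other five components are still blank.
model_only = AIUseCase(name="Churn prediction", model_name="churn-classifier")
print(model_only.missing_components())
```

A record that names only the model reports the other five components as missing, which is the gap a purely model-centric view leaves open.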

So what’s the difference between AI governance and model management?

Depending on who you talk to, you’ll likely get a variety of definitions for AI governance. Collibra defines AI governance as the application of rules, processes and responsibilities to drive maximum value from your AI use case by ensuring applicable, streamlined and ethical AI practices that mitigate risk, adhere to legal requirements and protect privacy. Notice that “AI use case” is the subject, not the AI model.

Model management, an element of MLOps, ensures that data science best practices are followed when building and maintaining an AI model throughout its lifecycle.

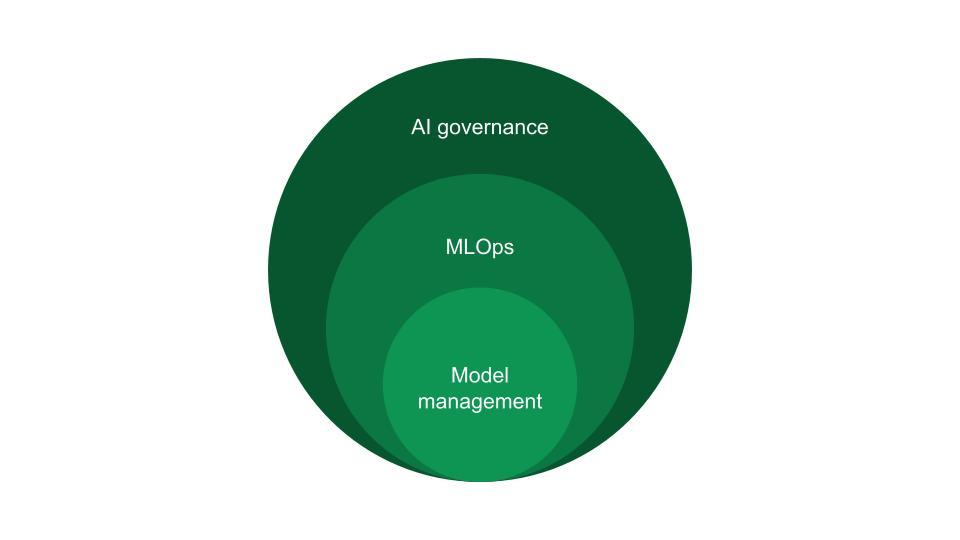

Based on these two definitions, if we wanted to visualize the relationship between AI governance and model management, it would look like the below. AI governance is the all encompassing practice of ensuring AI use case success, with MLOps being an important part of that overall process and model management being a component of MLOps.

Which does Collibra provide?

Collibra provides a purpose-built solution, Collibra AI Governance, that helps organizations deliver trusted AI with full visibility and control, while ensuring the use of reliable data, across any tool, for every AI use case. Does Collibra also handle model management? No! That’s what MLOps platforms are designed to do, and they do it really well. Does Collibra connect to MLOps platforms? Of course! Just as with any other data source integration, Collibra can ingest metadata, in this case AI model metadata, and expose that information to support better decision making and understanding around an AI use case. Collibra can also help organizations create a catalog of all of their AI models, even assigning them specific statuses like candidate or accepted, so users know which models they are able to use, along with a host of information about each model. For example, with our integration with Amazon SageMaker, organizations can synchronize AI model metadata like:

- Model Accuracy

- Model Precision

- Model Type

- Version

- Description from the source system

- And more

This is all critical AI model information that wouldn’t be available without the MLOps platform, and it helps AI governance stakeholders make better decisions about any use cases the model may be part of.
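
As a rough illustration of what a catalog of synchronized model metadata could look like, the sketch below models one catalog entry with the fields listed above plus a candidate/accepted status. Everything here is an assumption made for illustration: `ModelCatalogEntry`, `ModelStatus`, and `usable_models` are hypothetical names, the sample values are invented, and none of this reflects the actual Collibra or SageMaker APIs.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class ModelStatus(Enum):
    """Statuses mentioned in the article; real catalogs may define more."""

    CANDIDATE = "candidate"
    ACCEPTED = "accepted"


@dataclass(frozen=True)
class ModelCatalogEntry:
    """Hypothetical catalog entry built from metadata synced from an MLOps platform."""

    name: str
    model_type: str
    version: str
    status: ModelStatus
    accuracy: Optional[float] = None   # None when the source system reports no value
    precision: Optional[float] = None
    description: str = ""              # description from the source system


def usable_models(catalog: list[ModelCatalogEntry]) -> list[ModelCatalogEntry]:
    """Return only the entries users are cleared to use (status: accepted)."""
    return [entry for entry in catalog if entry.status is ModelStatus.ACCEPTED]


# Invented sample data, for illustration only.
catalog = [
    ModelCatalogEntry("churn-classifier", "classification", "3",
                      ModelStatus.ACCEPTED, accuracy=0.91, precision=0.88),
    ModelCatalogEntry("churn-classifier", "classification", "4",
                      ModelStatus.CANDIDATE),
]
for entry in usable_models(catalog):
    print(entry.name, entry.version, entry.status.value)
```

Keeping the status alongside the synchronized metrics lets a governance stakeholder filter for accepted models without needing access to the MLOps platform itself.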

AI governance takes a village. It’s no longer only the domain of Data Science teams huddled in an innovation lab. Everyone from Legal to business users has a role to play, and while data scientists will continue the management of AI models themselves, AI governance is a practice to be taken up by a cross-functional team. To learn more about AI governance or see Collibra AI Governance in action, visit collibra.com/ai-governance.
