AI technologies are everywhere these days. We’re thrilled by the tidal wave of interest because it perfectly aligns with our core mission. As we speed into a new AI era, there’s a critical element that’s often missing when organizations rush forward in hyper-competitive markets to build scalable, trusted AI programs — and that’s AI governance.

Every day I hear stories from CEOs who want to spin up AI programs simply to keep pace with the competition. It is very exciting to see senior executive interest, sponsorship, and urgency on the topic. Before you get too far into your AI journey, you’ll want to ensure your team is utilizing an AI governance framework, and that’s what I’ll focus on in this blog.

To a large degree, AI governance is an extension of our data governance efforts, tested many times over with organizations around the world.

What is an AI governance framework?

An AI governance framework offers a blueprint for how to create successful AI products. It is a map to a repeatable process for driving long-term, reliable AI programs.

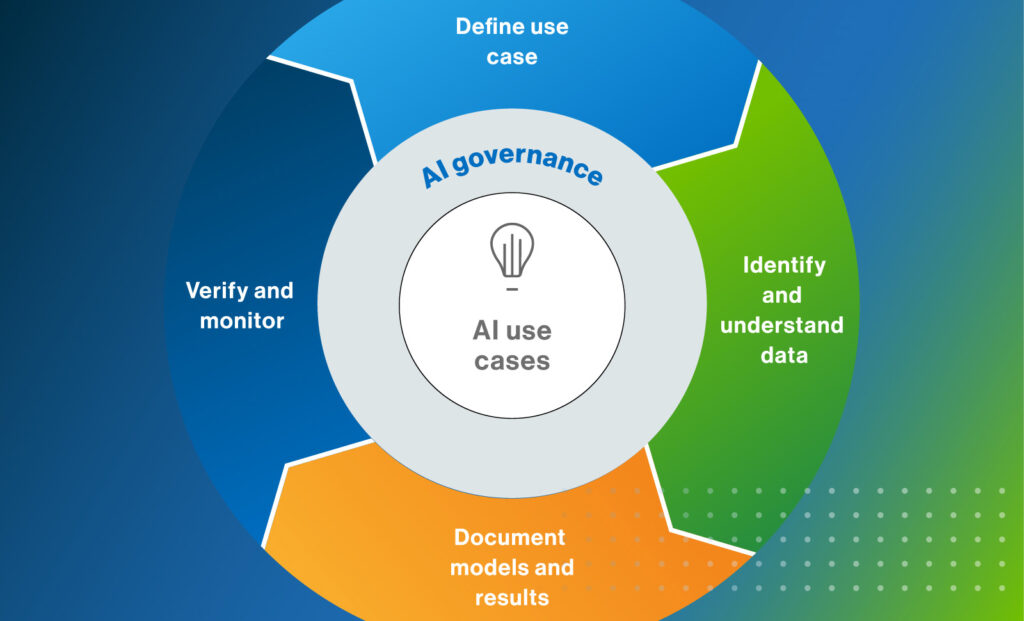

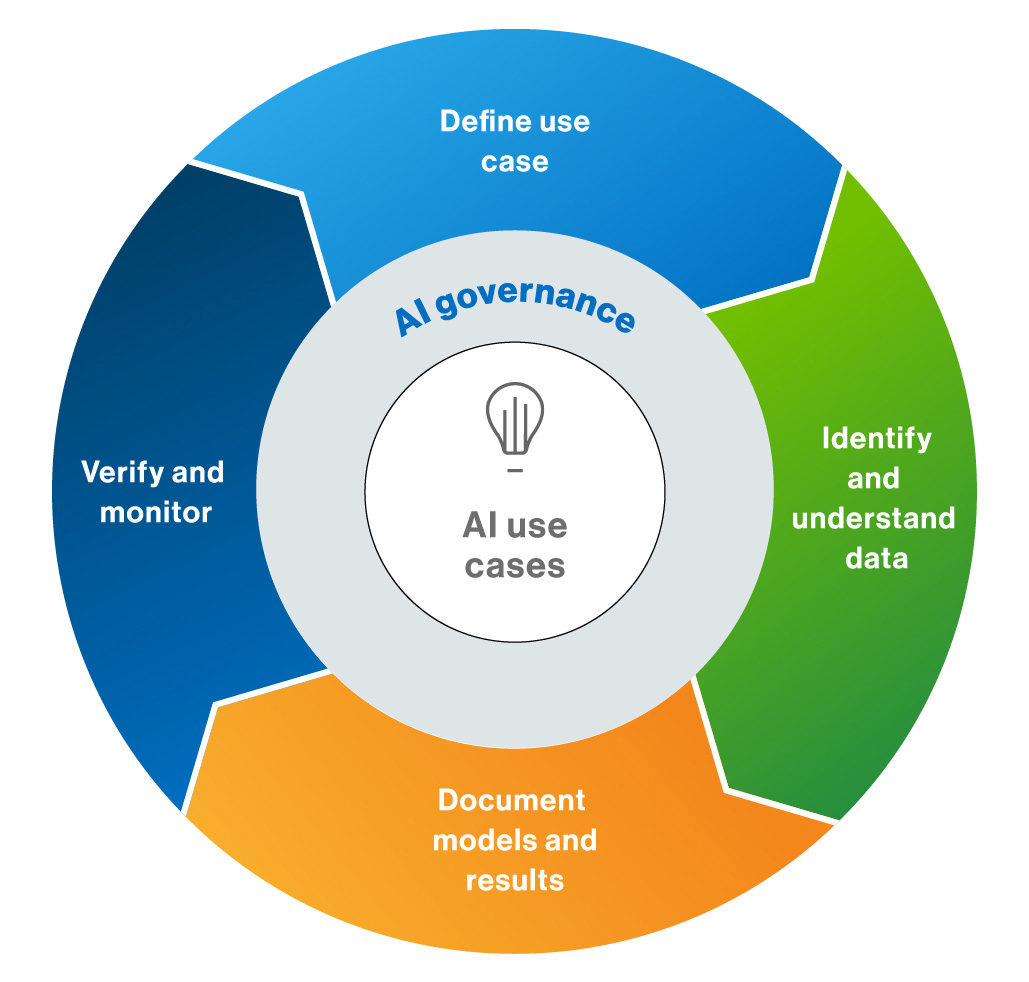

Our AI governance framework: A proven 4-step process

If you want to ensure the responsible use of AI, AI governance frameworks provide a set of principles and practices for governing its development, deployment and use. Our framework is informed by our definition of AI governance:

AI governance is the application of rules, processes and responsibilities to drive maximum value from your automated data products by ensuring applicable, streamlined and ethical AI practices that mitigate risk, adhere to legal requirements and protect privacy.

Clearly, there is a strong link between driving maximum value and effective governance; in other words, the ROI of your AI efforts depends directly on how successfully you navigate the risks posed by AI.

As shown in the figure, the four parts of the Collibra AI Governance framework are:

- Define the use case

- Identify and understand data

- Document models and results

- Verify and monitor

Let’s explore each of those steps.

1. Define the use case

Your first step in the AI governance process is to define your use case(s): what exactly is it that you want to do with AI?

Your use case is a description of the specific need that AI can address. It should clearly define the problem that the AI model solves, the data used to train the model, the desired outcomes, and the personas involved.

It’s important to define your use case before building an AI model because it helps ensure you’re actually solving the right problem. It also helps ensure that the data you’re feeding the model is relevant, necessary, accurate and up-to-date. Finally, with a use case in hand and realistic outcomes, you’re in position to understand how to measure success.

You can define a use case along a few dimensions:

- Problem: What is the business problem you want to solve with AI?

- Outcomes: What does success look like?

- Timing: When do you need your AI product to go live?

- Model and data: What model and data will be used? Is the data protected information (like PII data)?

- Ownership: Who is ultimately responsible for the project?

- Risk: What potential risks exist, and how sizable are they?

This step is crucial because it enables you to evaluate and prioritize your roadmap before you invest any resources to build an AI system.
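As a minimal sketch, the dimensions above could be captured in a small record so that every entry in your use case library answers the same questions. The class name `AIUseCase`, its field names, and the example values are illustrative assumptions, not part of the Collibra framework or product.

```python
from dataclasses import dataclass, fields
from datetime import date


@dataclass
class AIUseCase:
    """One entry in a library of AI use cases (field names are illustrative)."""
    name: str
    problem: str          # business problem to solve with AI
    outcomes: str         # what success looks like
    go_live: date         # when the AI product needs to go live
    model: str            # model to be used
    datasets: list[str]   # data used to train or tune the model
    contains_pii: bool    # is any of the data protected information?
    owner: str            # who is ultimately responsible
    risk: str             # e.g. "low", "medium" or "high"

    def missing_fields(self) -> list[str]:
        """Return the names of text or list fields that are still empty."""
        return [
            f.name
            for f in fields(self)
            if isinstance(getattr(self, f.name), (str, list))
            and not getattr(self, f.name)
        ]


# Hypothetical example entry; every value here is made up for illustration.
fraud_detection = AIUseCase(
    name="Fraud detection",
    problem="Flag suspicious card transactions before settlement",
    outcomes="Fewer fraudulent transactions reach settlement",
    go_live=date(2025, 1, 1),
    model="gradient-boosted classifier",
    datasets=["card_transactions"],
    contains_pii=True,
    owner="",  # not yet assigned
    risk="high",
)

print(fraud_detection.missing_fields())
```

A check like `missing_fields()` makes gaps visible early: a use case without an owner or a stated outcome is hard to prioritize, and harder to defend when someone asks about it.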

If you’re in financial services, maybe you’re considering how to incorporate AI into fraud detection, or personalized customer service, denial explanations or financial reporting. If you work in life sciences, you might be looking into applying AI to drug discovery, clinical trials, patient engagement or prosthesis design. For public sector efforts, you might think about AI for citizen services, knowledge management, content generation or fraud detection.

Even if your AI initiative is already underway, I still recommend starting with Step 1. After all, it is very likely that you’ll be asked about your library of AI use cases, whether by your executives, your compliance colleagues, the market, or regulators.

2. Identify and understand data

Now that you’ve worked through Step 1 of the AI governance framework, you have a starting point for your library of use cases and your scope for identifying data lineage, which leads directly into Step 2: identifying and understanding the data to train or tune the AI model.

To do so, you’ll need to collect and assess the data that’s available. You’ll want to assess whether it’s high-quality data or not. And you want to understand if the use of the data is legally permissible and within your organization’s policies.

For example, if your use case is fraud prevention and your data set includes personal data, you probably have the right parameters to move forward (with the proper guidance from your friends in compliance). But if your use case involves sentiment analysis, your data set is support tickets, and the customer information is NOT anonymized, then it’s probably not a viable use case. As a rule of thumb, the potential value should outweigh the risks.

Usually, this step involves reaching out to relevant data owners. Maybe it’s your data science colleague who has a store of transactional data. Or your data analyst friend in Finance who manages a database of customer accounts. Once you’ve worked with the domain-specific data expert to identify specific data sets, you’ll want to understand more about each data set: its quality, its completeness, its classifications, whether it contains personal data, and so on.
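To make that assessment concrete, here is a rough sketch of a first-pass check on a candidate data set: how complete each column is, and whether any column is classified as personal data. The function names, the 90% completeness threshold, and the sample rows are all assumptions for illustration; a real assessment would rely on your own catalog classifications and quality rules.

```python
def completeness(rows, columns):
    """Return, per column, the fraction of rows with a non-empty value."""
    total = len(rows)
    scores = {}
    for col in columns:
        filled = sum(1 for row in rows if row.get(col) not in (None, ""))
        scores[col] = filled / total if total else 0.0
    return scores


def assess_dataset(rows, columns, personal_columns, min_completeness=0.9):
    """Summarize completeness and personal-data exposure for one data set."""
    scores = completeness(rows, columns)
    return {
        "completeness": scores,
        "incomplete_columns": sorted(
            col for col, score in scores.items() if score < min_completeness
        ),
        "contains_personal_data": any(col in columns for col in personal_columns),
    }


# Hypothetical support-ticket sample; one row is missing the customer email.
tickets = [
    {"ticket_id": 1, "customer_email": "a@example.com", "text": "Login fails"},
    {"ticket_id": 2, "customer_email": "b@example.com", "text": "Slow export"},
    {"ticket_id": 3, "customer_email": "", "text": "Billing question"},
    {"ticket_id": 4, "customer_email": "d@example.com", "text": "Great support"},
]

report = assess_dataset(
    tickets,
    columns=["ticket_id", "customer_email", "text"],
    personal_columns={"customer_email"},  # assumed classification
)
print(report)
```

A report like this does not decide whether the use case is viable, but it gives you and your compliance colleagues something specific to discuss.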

Learn how a Data Marketplace helps you identify and understand data for your AI use cases.

3. Document models and results

By the end of Step 2, you’ve reviewed perhaps a dozen data sets and maybe a handful are qualified. Now, it’s time to start training your model.

Step 3 involves leveraging your data and creating a model that you can:

- Document

- Trace

- Track

This is where a data scientist will spend most of the project time. Perhaps you’ll start by creating a smaller sample of the data and running basic algorithms on your laptop or a cluster. You might spin up a training model using Python to quickly validate your results with your business stakeholders. Maybe you realize you need another data set. Maybe your model needs tweaking. This is a very iterative process.
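One lightweight way to keep each iteration documented, traceable and trackable is to write a small record per model version that links it back to its use case and to a fingerprint of the data it was trained on. The sketch below uses only the Python standard library; the `ModelRecord` structure, its field names and the sample data are assumptions for illustration, not a prescribed format.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


def fingerprint(data: bytes) -> str:
    """Return a SHA-256 hex digest so a data snapshot can be identified later."""
    return hashlib.sha256(data).hexdigest()


@dataclass
class ModelRecord:
    """Illustrative record tying a model version to its use case and data."""
    model_name: str
    version: str
    use_case: str
    datasets: dict[str, str]   # data set name -> fingerprint of the snapshot
    metrics: dict[str, float]  # fill in after evaluation
    notes: str = ""


# Hypothetical sample standing in for a real training extract.
sample = b"id,amount\n1,20.00\n2,950.00\n"

record = ModelRecord(
    model_name="fraud-classifier",
    version="0.1.0",
    use_case="Fraud detection",
    datasets={"card_transactions_sample": fingerprint(sample)},
    metrics={},  # intentionally empty: no results are claimed here
    notes="First iteration on a small sample.",
)

record_json = json.dumps(asdict(record), indent=2, sort_keys=True)
print(record_json)
```

Storing one such record per iteration means that when a stakeholder asks which data produced which result, the answer is a lookup rather than an investigation.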

Remember, your model analysis is an effort to get some initial results from the model before you move into production.

Ultimately, you’ll land on a model that passes scrutiny, that you’ve validated with the business, and that’s ready to become your AI data product. And once this step is complete, you’re ready for the hard step of moving into production.

4. Verify and monitor

This step is the all-important transition that you’ve been working toward since you defined your AI use cases. You’re ready to go live and release your amazing application to the world (or your internal audience).

The 4th step includes the work to:

- Verify your model

- Move the model from its sandbox into a production environment

- Continuously monitor to ensure the quality and compliance of the underlying data products

- Retrain, test and audit models regularly

Necessarily, the models going into production have already undergone a lot of scrutiny by your data scientists and key stakeholders. But as you move into launch, and despite all your best efforts, your model will still produce errors, for example because of data drift. You’ll want to regularly check the model’s output to ensure there are no inaccuracies, hallucinations, biases, etc.
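As one illustration of what monitoring for data drift can look like, the sketch below compares a feature’s production values against its training baseline using the Population Stability Index (PSI). This is a commonly used drift measure, not a method prescribed by our framework; the bin count, the 0.2 alert threshold (a widely quoted rule of thumb) and the sample values are assumptions.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline and a current sample.

    Bins are equal-width over the baseline's range; values outside that
    range are counted in the first or last bin. Empty bins are floored at
    `eps` so the logarithm stays defined.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant baselines

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        return [max(c / len(values), eps) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values: the production sample has shifted upward.
baseline = [float(v) for v in range(100)]
shifted = [v + 50.0 for v in baseline]

DRIFT_THRESHOLD = 0.2  # assumed rule-of-thumb alert level

for name, sample in [("unchanged", baseline), ("shifted", shifted)]:
    score = psi(baseline, sample)
    status = "investigate" if score > DRIFT_THRESHOLD else "ok"
    print(f"{name}: PSI={score:.3f} -> {status}")
```

A check like this only tells you that the input data has moved; deciding whether to retrain, retest or audit the model is still a judgment call for the people who own it.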

The model and how you control it is going to be a very important starting point.

If you identify problems with the output, you’ll use the documentation from the earlier steps to identify where the problems originate. You’ll be able to connect the dots from your model to your data set to your use case. And you can reliably adjust your entire AI pipeline, from your use case to your data set to your model, to course correct where necessary.

The truth is that AI products — and generative AI products in particular — are hard to build (and often expensive). For a prototype you can quickly call an API and show something interesting, but to get something valuable into production takes a lot more effort.

Putting a model into production is hard for 2 big reasons:

- It’s technically challenging because there are a ton of components involved.

- The model needs to scale in production – and your business needs to adapt to it.

If it’s going to touch all of your customers, you may be scaling to millions or hundreds of millions of users. Even if only a small fraction experience ‘hallucinations’ or another generative AI error, you are still faced with significant risks. By applying a robust AI governance framework you’ll mitigate and minimize risks.

Why AI governance is essential

AI governance is essential for organizations that need to ensure AI products are using trusted data — not just for training models, but for maintaining trusted outputs.

At Collibra, we’re powering organizations that need the highest-quality data for the highest-quality AI. With active metadata at its core, Collibra Data Intelligence Cloud serves as the foundation for your AI strategy.

While every industry will face increasing ethical and privacy challenges in their rush to implement AI, heavily regulated industries like financial services, life sciences, and the public sector will encounter even more daunting challenges to compete and, at the same time, govern their AI initiatives. The speed at which organizations in these industries can interact with customers, discover new drugs, or detect fraud will become staggering. And so will the risks.

As you speed toward launching AI products, I urge you to consider AI governance not only as a risk management and mitigation element of your efforts but also as a catalyst for creating scalable, trusted AI.

At the end of the day, you and your organization are responsible for what you do with your AI’s output. You’ll find it hard to point fingers at some cute chatbot.

Our AI governance framework provides a best practice to build on. To continue your exploration of this topic, I invite you to read my overview of the AI governance conversation as well as my thoughts on the data- versus model-centric debate. You can also unlock the on-demand session of my co-founder Felix Van de Maele speaking about the AI governance framework.

Until next time, I wish you the best in your AI journey.