The availability and use of trustworthy data is critical for asset managers – whether it’s for financial planning, portfolio management, advisory services, research, investments, regulatory compliance, or financial reporting.

Yet most asset managers will attest that managing their data, extracting value from it and ensuring its hygiene are among the biggest hurdles they face. According to Accenture, asset managers need a ‘data reinvention.’ In a survey conducted by the consulting firm, nearly 66% of asset managers identified data management as a top priority at their companies.

The reality is that asset management firms operate in a highly complex data landscape, with petabyte-scale data residing across hundreds of disparate sources. Data silos, a lack of data standards, poor data quality and ungoverned data (data that is neither verified nor certified) have been a growing problem for asset managers. Such an environment can quickly become fertile ground for operational, regulatory compliance and reputational risk, with potentially dire consequences. The ramifications of a weak data management strategy can be far-reaching.

Need for comprehensive and modern data management

At its core, asset management is largely about risk management, and risk and regulatory compliance are tightly intertwined. Together, they affect every aspect of an asset manager’s operations.

Virtually every regulation, including BCBS 239, BSA/AML, MiFID II and FRTB, requires financial institutions to aggregate massive volumes of data from disparate internal and external sources for analysis. Meeting these requirements calls for a comprehensive data management, governance and data quality strategy that underpins risk measurement, adherence to regulatory rules, and reporting.

Regulatory authorities no longer rely on the aggregated results presented in reports. Increasingly, they want granular detail to confirm that sound data governance, data quality and data protection practices are in place. They want audit trails demonstrating that the data used for analysis, back-testing and reporting is of the highest quality and can be traced back to its origin, with detailed information if required.

Fundamental review of the trading book (FRTB)

For instance, FRTB is one of the most consequential regulations to come into effect in 2023, and it will have long-lasting implications for banks and asset managers globally. It will require financial institutions to:

- Measure the risk associated with their trading books and raise their capital reserves to support those risks.

- Enact a rigorous data management, data governance and data quality strategy to enable and support FRTB mandates.

- Create a new data taxonomy and meet stringent data lineage requirements, tracing data sets, models and risk calculations with comprehensive audit trails for reporting purposes. FRTB will also require risk and portfolio management workflows that obtain the necessary reference, classification and pricing information for each holding, and that compute and aggregate exposures.

- Provide greater disclosure of, and transparency into, market risk capital charges, including capital ratios calculated using the standardized approach and, where applicable, internal models.
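The lineage requirement in the list above is, at bottom, a graph problem: if each data set records which data sets it was derived from, an auditor can walk backward from a report to its raw inputs. The following is a minimal sketch under that assumption, not Collibra's API or any regulator's prescribed method. The `UPSTREAM` mapping and every data set name in it are hypothetical; a real catalog would typically harvest these edges from ETL jobs, SQL and BI tools rather than hard-code them.

```python
from collections import deque

# Hypothetical lineage graph: each data set maps to the data sets it is derived from.
# Names are illustrative only; in practice the edges would come from a metadata catalog.
UPSTREAM: dict[str, list[str]] = {
    "frtb_capital_report": ["market_risk_capital"],
    "market_risk_capital": ["position_exposures", "risk_factor_prices"],
    "position_exposures": ["trade_positions", "instrument_reference"],
    "risk_factor_prices": ["vendor_price_feed"],
    "trade_positions": [],
    "instrument_reference": [],
    "vendor_price_feed": [],
}


def trace_upstream(dataset: str, graph: dict[str, list[str]]) -> list[tuple[str, str]]:
    """Return every (derived, source) edge reachable upstream of `dataset`.

    Breadth-first traversal; the `seen` set ensures each node is expanded once,
    so shared sources and accidental cycles do not cause repeats or infinite loops.
    """
    edges: list[tuple[str, str]] = []
    seen = {dataset}
    queue = deque([dataset])
    while queue:
        current = queue.popleft()
        for source in graph.get(current, []):
            edges.append((current, source))
            if source not in seen:
                seen.add(source)
                queue.append(source)
    return edges


def root_sources(dataset: str, graph: dict[str, list[str]]) -> set[str]:
    """Return the original sources (nodes with no recorded inputs) feeding `dataset`."""
    reached = {source for _, source in trace_upstream(dataset, graph)}
    return {node for node in reached if not graph.get(node)}


if __name__ == "__main__":
    for derived, source in trace_upstream("frtb_capital_report", UPSTREAM):
        print(f"{derived} <- {source}")
    print(sorted(root_sources("frtb_capital_report", UPSTREAM)))
```

Running the script prints each derived-from edge, then `['instrument_reference', 'trade_positions', 'vendor_price_feed']`: the raw inputs an auditor would need to inspect to verify the hypothetical capital report.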

To ensure adherence to FRTB and other regulations, asset managers will need a comprehensive and modern approach to metadata management, one that uses machine learning-powered automation to catalog, govern, cleanse and protect their data at scale.

Accelerate data democratization and self-service analytics

Fully governed, high-quality data that’s easily discoverable and traceable is the linchpin of democratizing data and enabling self-service analytics at scale.

According to Forrester Research, nearly 65% of enterprise data goes unused for analysis. This is largely because most enterprises don’t know what data they have, and their users can’t easily find the data they need or validate it in a timely manner.

With Collibra, asset managers can empower their data citizens (including line-of-business users such as portfolio, risk and investment managers) to easily discover the data they need.

- For instance, when modeling and analyzing credit, liquidity and market risks, investment managers can rapidly discover validated and certified data, review detailed information about it, and trace its lineage.

- They can use popular business intelligence (BI) tools such as Tableau together with Collibra Data Catalog to gain a more complete, granular understanding of the data used for analysis.

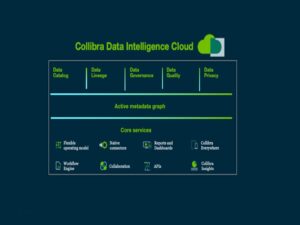

The Collibra Data Intelligence Cloud advantage

At Collibra, we work with many of the leading financial institutions to help them derive value from their data. Asset managers, retail and investment banks, fintechs and Global Systemically Important Banks (G-SIBs) rely on Collibra Data Intelligence Cloud to:

- Rapidly catalog all their data regardless of where it resides to create a single, trusted repository of metadata (data about data). The catalog offers advanced capabilities that are purpose-built to empower users to easily find and understand the data they need with rich business and technical context at a granular level.

- Enact a comprehensive and adaptive data governance strategy. With Collibra Governance, asset managers can accelerate the automation of complex policies, rules and guidelines that help them comply with a myriad of regulations. Pre-built templates, out-of-the-box data domains, a glossary of terms, a data usage registry, physical and/or logical data dictionaries, reference data management, stewardship management and intuitive workflows give cross-functional teams a framework for establishing a common understanding of data and ensuring consistency.

- Address data quality and privacy issues to ensure data integrity at scale. For instance, Collibra predictive data quality and observability uses machine learning (ML) to proactively detect anomalies such as missing records and values, as well as broken relationships across tables or systems, enabling rapid resolution. Moreover, advanced data protection capabilities help ensure that classified data is easily identified and fully protected with role-based access control.

- Obtain visibility throughout the data supply chain with an active metadata graph and end-to-end data lineage, enabling users to easily visualize the flow of data from source to target and understand all data dependencies at a granular level. For instance, with end-to-end data lineage, users can better understand how risk models are computed and trace the lineage of each data set, with rigorous audit trails for reporting purposes.
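The data quality anomalies listed above (missing records, missing values and broken relationships across tables) can be illustrated with simple rule-based checks. This is a minimal sketch in plain Python, not Collibra's ML-based approach: the `positions` and `instruments` tables, their column names and all three check functions are hypothetical, chosen only to make each anomaly type concrete.

```python
from typing import Any, Iterable

Row = dict[str, Any]

# Hypothetical sample tables: each position references an instrument by instrument_id.
instruments: list[Row] = [
    {"instrument_id": "INST-001", "asset_class": "govt_bond"},
    {"instrument_id": "INST-002", "asset_class": "equity"},
]
positions: list[Row] = [
    {"position_id": 1, "instrument_id": "INST-001", "market_value": 1_000_000.0},
    {"position_id": 2, "instrument_id": "INST-002", "market_value": None},      # missing value
    {"position_id": 3, "instrument_id": "INST-999", "market_value": 250_000.0},  # no such instrument
]


def missing_values(rows: list[Row], columns: list[str]) -> list[tuple[int, str]]:
    """Return (row_index, column) pairs where a required column is absent or None."""
    return [(i, col) for i, row in enumerate(rows) for col in columns if row.get(col) is None]


def orphaned_keys(child_rows: list[Row], parent_rows: list[Row], key: str) -> list[Any]:
    """Return child key values with no matching parent row (a broken relationship)."""
    parent_keys = {row.get(key) for row in parent_rows}
    return [row.get(key) for row in child_rows if row.get(key) not in parent_keys]


def missing_records(expected_keys: Iterable[Any], rows: list[Row], key: str) -> list[Any]:
    """Return expected key values that never arrived, e.g. rows dropped during a load."""
    present = {row.get(key) for row in rows}
    return sorted(k for k in expected_keys if k not in present)


if __name__ == "__main__":
    print(missing_values(positions, ["instrument_id", "market_value"]))  # [(1, 'market_value')]
    print(orphaned_keys(positions, instruments, "instrument_id"))        # ['INST-999']
    print(missing_records({1, 2, 3, 4}, positions, "position_id"))       # [4]
```

Hand-written rules like these only catch the failures someone anticipated; ML-based observability tools aim instead to learn expected patterns from the data and flag deviations without a separate rule for every check.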

Next Steps:

To learn more, I would like to invite you to watch the following webinars and videos: