In this post, we will be providing a practical walkthrough for ROI in your data quality program. When kickstarting data quality with different organizations we’ve discovered this is a common need. Questions include, ‘how many columns or assets should I govern’ and ‘how many require data quality checks’? As a governance team, we are often sizing up the landscape of large enterprises to answer these questions. A common example would be something like 100 databases, each of which has 30 schemas, which has hundreds of tables, and in turn have 90 columns on average. So can you govern 81 million columns?

Math check (100 dbs * 30 schemas * 300 tables * 90 columns). 81,000,000

With the task of governing 81 million columns, let’s reflect on the challenges. The sheer volume of data quality (DQ) metadata that needs to be managed and the journey to effective governance can be staggering. Just as enterprises typically initiate their data management strategies with Critical Data Elements (CDEs), there’s a valuable lesson to be learned in leading a data quality journey that is scalable.

Effective scaling requires a careful approach, prioritizing areas of high impact and aligning with the organization’s capacity to manage and fix data reliability issues. This approach helps governance and quality efforts to remain actionable, sustainable and aligned with the business’ overarching goals.

Getting started with effective data quality

When dealing with trust in underlying data, it is important to start off small. Don’t boil the ocean. Rather, start by asking yourself three simple questions for successful ROI with data quality.

1. If your data is 99% accurate with only a 1% error rate, could your remediate 8.1M issues a day?

- Our view is that you could potentially report them but not likely have the capacity to take the proper action. This will break the balance. If ROI is truly important, we would be setting ourselves up for failure.

2. Could you manually write millions of DQ rules? What level of human effort would this take?

- While Collibra’s Data Quality automates most rule writing, understanding and actioning millions of automated rules is not necessarily the optimal approach and should be considered carefully.

3. Even if a product can handle a certain number of daily incidents, do you have the people and process to do so?

- This is perhaps the most important question to consider. Let’s look at an example below to see how a focus on the CDEs helps us manage an initial implementation for data quality.

Awareness vs. usage: governed & critical data elements (CDE’s)

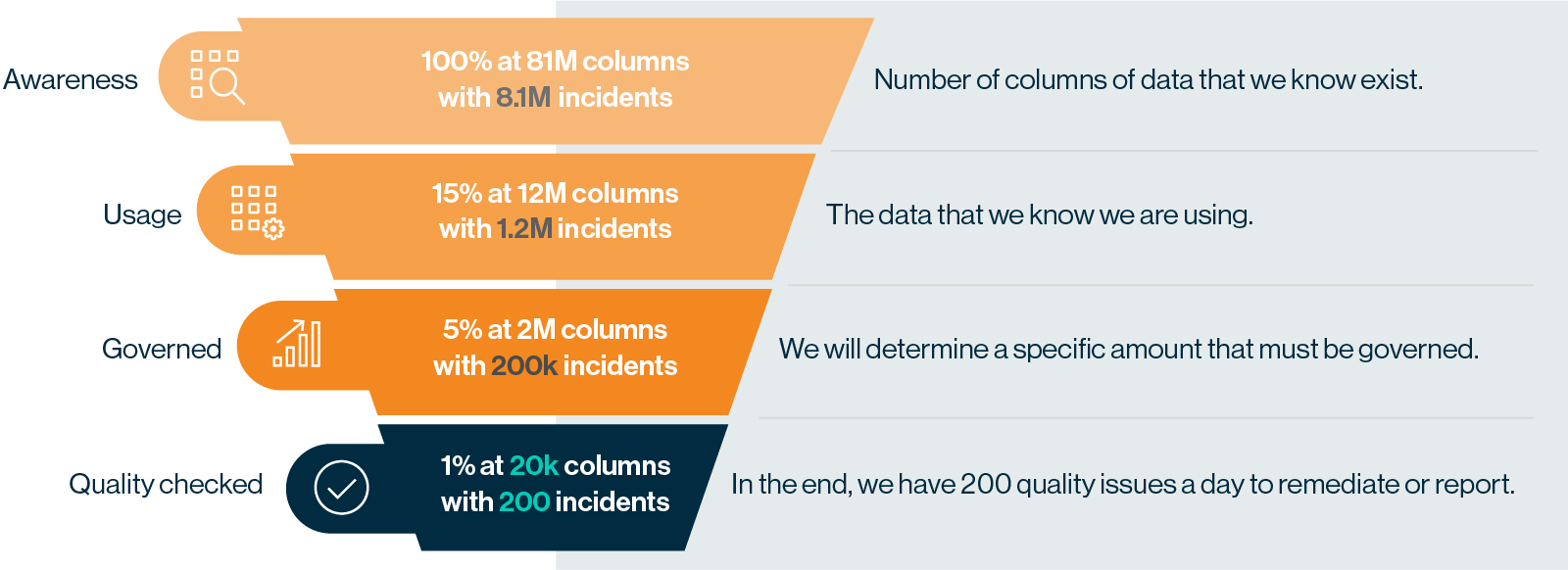

The diagram below is a quick guide to how a large enterprise might truly operate a healthy quality and governance program for teams to start with. In the current scenario, we recommend narrowing down to 20 thousand columns under a DQ program, allowing you to grow after demonstrating where data health issues are and the next steps toward action.

With the right solution you can index or catalog tens of millions of columns. However it is important to keep in mind that each column contains millions of rows of data. A reasonable expectation is around a 2% error rate per column. This leads to the question: do you have a team to fix the errors? It will be costly to actively monitor 81M columns. You will want to actively govern and run quality checks on data you plan to report or potentially action.

While we of course want to help companies aspire to 100% data quality, it is important to start with wins in data governance, demonstrate value, and scale up. Starting with highly used, critical data is key to showing high-ROI.

People, process and product

Even if a product can handle a large number of daily incidents, do you have the people and process to do so?

At Collibra, we have seen outcomes that adeptly scale to accommodate any number of columns. However, the real challenge lies in whether the human and procedural infrastructure can match this technical capability. While the product may sift through 81 million assets, the success of all this ultimately hinges on the people and processes in place.

This revolves around a unified strategy among data stewards and leaders. These should be streamlined and consistent, avoiding fragmentation with changes in leadership and organizational structure. Addressing this requires a flexible, use-case-oriented approach, capable of evolving over time and aligning with the organization’s shifting priorities.

With people, the shift from traditional methodologies to agile digital frameworks has been marked by processes and tools, like the Software Development Life Cycle (SDLC) and Jira. These frameworks are widely accepted due to their proven effectiveness. Yet, the adoption of these frameworks is often slow, due to a cultural mindset. The transition from ‘how long will it take to complete everything?’ to ‘what can be accomplished in this sprint?’ is significant. It’s a shift in the time-value-money equation that may take additional time, but in the long run has massive potential for ROI.

In summary

Flooding the system out of the gate with excessive data quality tasks, will lead to counterproductive results early on, generating more confusion than clarity. In the end, it’s about striking the right balance, ensuring that the team is not overwhelmed by the scale of data quality efforts. These should enhance, not impede, the decision-making process. To foster a culture where data quality is integrated into daily workflows, you need a system that helps you narrow down to what is meaningful and manageable. This will transform data quality management from a daunting task that no one could possibly tackle to a seamless and attainable aspect of the data governance fabric.