Imagine.

You’re a technical lead or a data analyst at a global bank.

Your bank relies on real-time data feeds, such as interest rates, housing data, stock data, and sensitive customer information, to make critical financial decisions and price loans.

If the Federal Reserve raises rates overnight and your systems fail to check the freshness of the interest rate data feed, you may end up offering loans at outdated rates.

Moreover, if a technical glitch stops feeds from loading or leaves them only partially loaded, downstream applications will consume missing, partial, or incorrect data. Your bank might end up making inaccurate stock trading decisions or sending incorrect communications to customers.

Even worse, data downtime events like these can irreparably damage reputations: yours and the bank’s.

Customers, investors, and regulators might lose trust in your organization’s ability to effectively manage risk and maintain the integrity of your data, potentially leading to a decline in business and more stringent regulatory oversight.

What’s the root cause of so much potential damage? Stale data.

The truth is that stale data is not only bad for business; it’s embarrassing, both for the organization and for the data professionals responsible for keeping the data up to date.

Luckily, you can stop stale data and protect both your own reputation and your organization’s. To do so, use data observability to monitor the freshness of your pipelines and alert on stale data.

Do you want to be notified in Slack or by the CEO?

No one wants to get bad news from the boss, especially DataOps and engineering teams.

You want early-warning notifications via email, Slack, or Teams so you can resolve issues before stale data moves downstream, where it produces inaccurate BI reports, undermines the customer experience, and worse.

To achieve early-warning detection, you need a system that continuously monitors what normal looks like across all your data feeds and alerts you when something looks abnormal.

This type of monitoring is commonly called data observability, and it’s what Collibra Data Quality & Observability provides.

Data observability is exploding in popularity across enterprises globally for two reasons. A stale data event can have a dramatic business impact. Above all, much of the costly damage can be avoided if issues are identified and resolved within a few hours instead of a few days.
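To make “what normal looks like” concrete, here is a minimal sketch of one common approach. It assumes you record the arrival time of each load. The sketch learns the typical gap between arrivals and flags the feed when the current silence is unusually long. The function name `is_feed_late` and the mean-plus-k-standard-deviations rule are illustrative assumptions. They are not how Collibra Data Quality & Observability computes its baselines.

```python
from datetime import datetime, timedelta
from statistics import mean, stdev
from typing import Sequence


def is_feed_late(arrivals: Sequence[datetime], now: datetime, k: float = 3.0) -> bool:
    """Return True if the feed's current silence is abnormally long.

    Hypothetical rule for illustration: "normal" is learned from the gaps
    between past arrivals, and the feed is flagged when the time since the
    last arrival exceeds mean(gaps) + k * stdev(gaps).
    """
    if len(arrivals) < 3:
        # stdev needs at least two gaps, i.e. three arrivals.
        raise ValueError("need at least three arrivals to learn a baseline")
    ordered = sorted(arrivals)
    gaps = [(b - a).total_seconds() for a, b in zip(ordered, ordered[1:])]
    threshold = mean(gaps) + k * stdev(gaps)
    current_gap = (now - ordered[-1]).total_seconds()
    return current_gap > threshold


if __name__ == "__main__":
    # An hourly feed that usually lands a few minutes either side of the hour.
    start = datetime(2024, 1, 1)
    offsets = [0, 65, 122, 187, 241, 304, 360, 426, 483, 542]  # minutes
    history = [start + timedelta(minutes=m) for m in offsets]
    last = history[-1]
    print(is_feed_late(history, last + timedelta(minutes=30)))  # False
    print(is_feed_late(history, last + timedelta(hours=4)))     # True
```

A learned baseline means each feed gets its own notion of “late” without someone hand-tuning a threshold per feed. Production tools typically also account for seasonality, such as weekends and month-end batches, which this sketch ignores.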

Monitor 1,000s of pipelines

At the level of a single data feed, a data outage can be fairly obvious.

However, the problem becomes far more complex when you manage hundreds or thousands of data feeds across different users, permissions, and business units. Often, those feeds also flow from source systems into a cloud database, which only adds to the complexity and the potential damage.

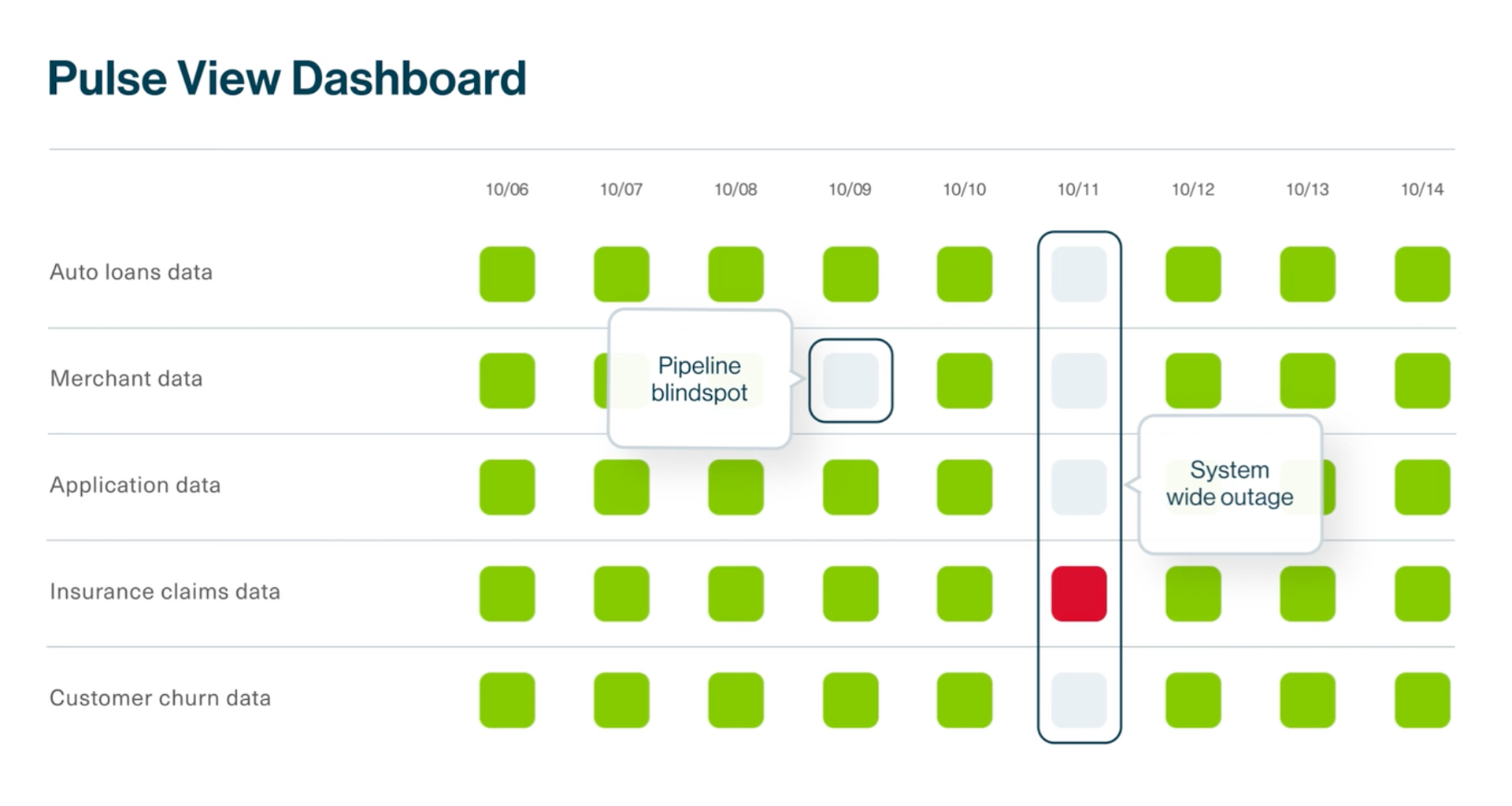

Collibra Data Quality & Observability makes it easy to see the big picture while also letting you drill down into more detailed views of individual data pipelines.

In the screen grab below, you can see how the Pulse Dashboard provides a heat map view of data pipeline performance.

Collibra helps you see inside data pipelines to reveal blind spots in your data operations.

Write a Stale Data check

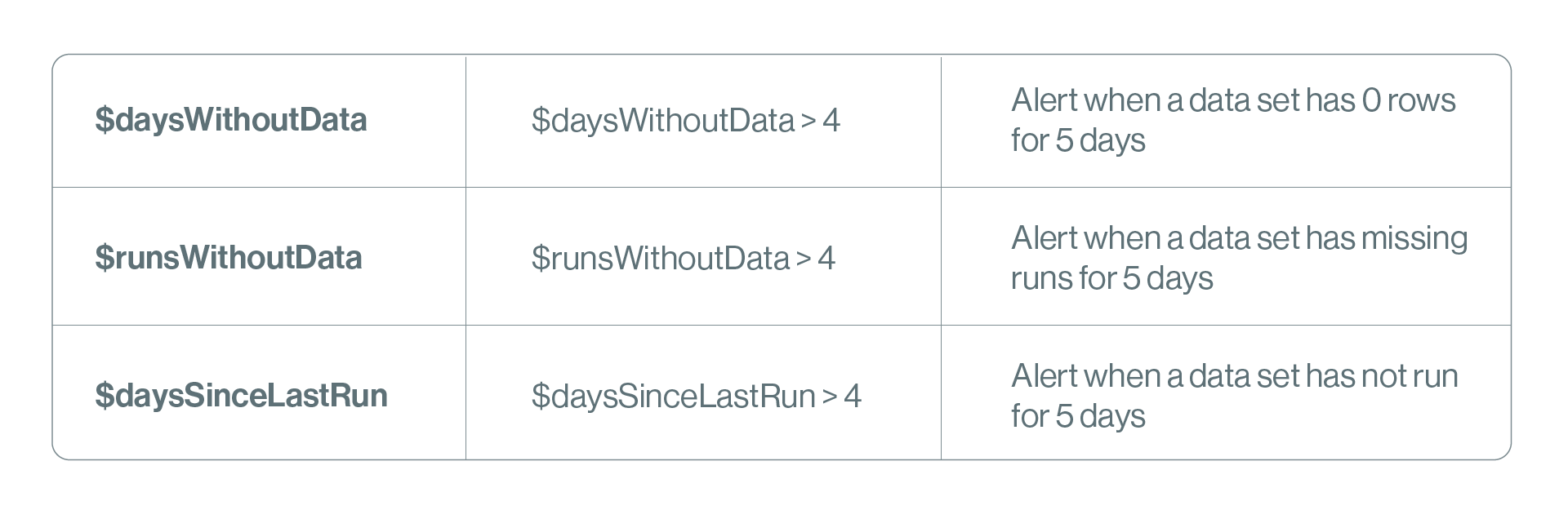

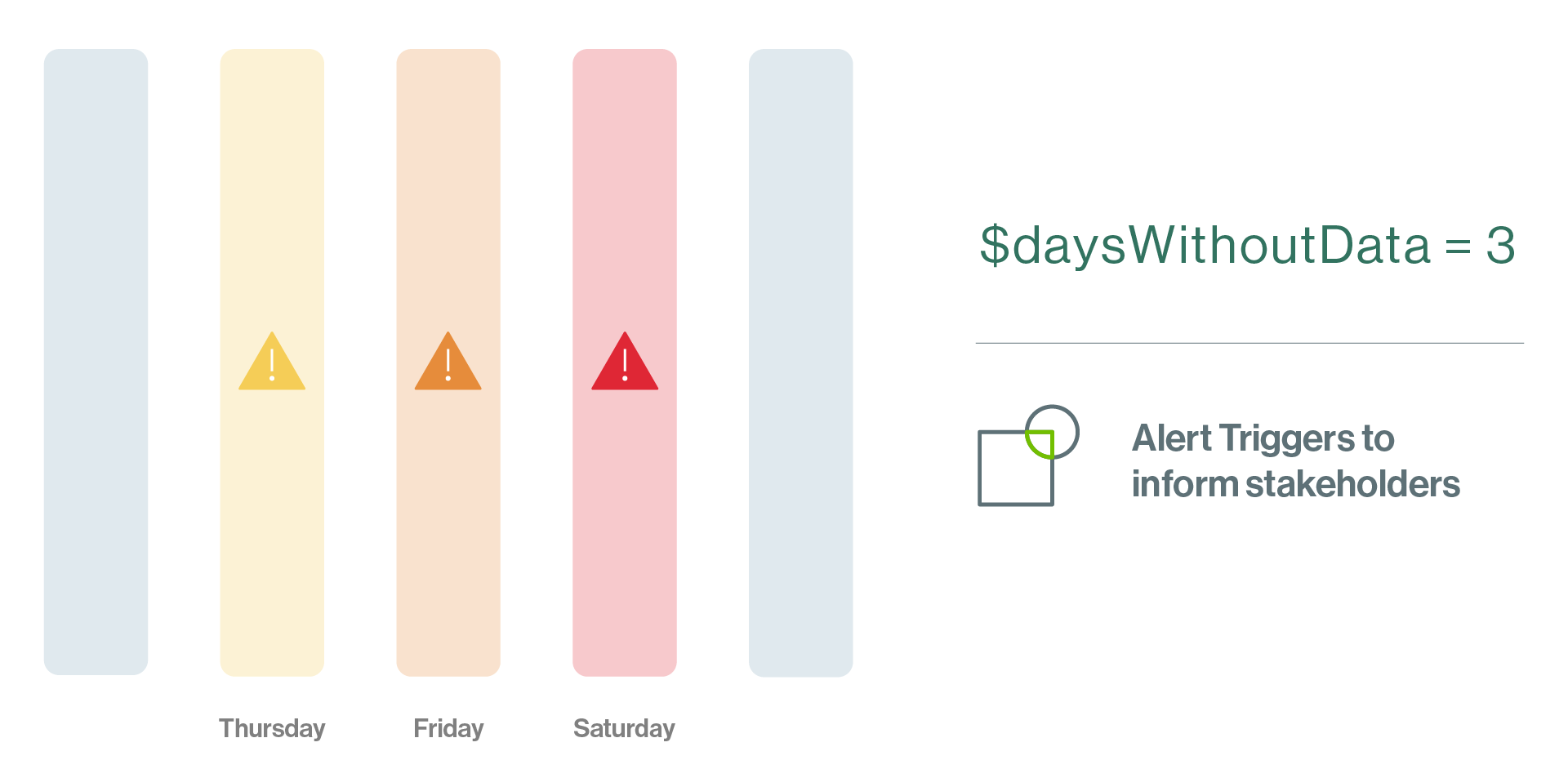

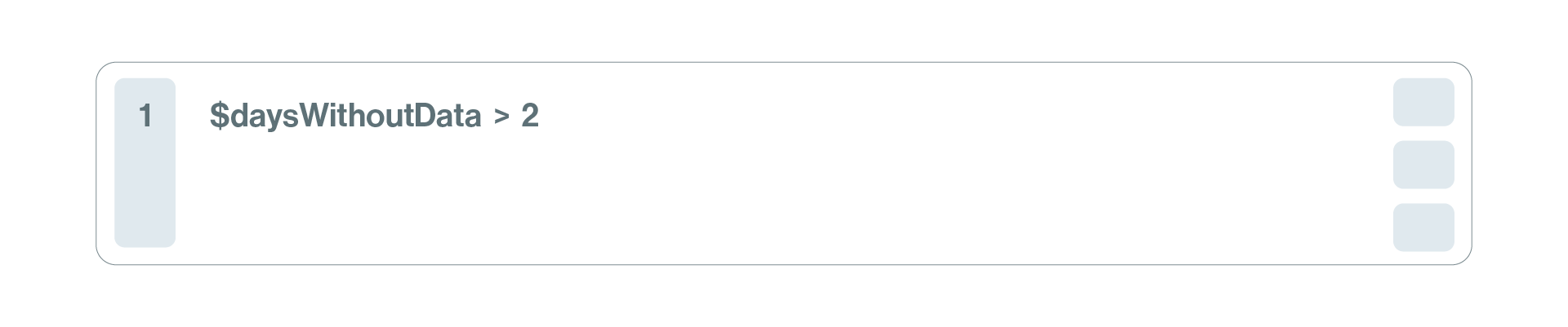

Sometimes, a data engineer or analyst may want to manually write a Stale Data check.

For convenience, Collibra Data Quality & Observability offers a SQL editor with shortcut variables, called “stat rules,” that gives you full control at the dataset level and lets you define unique conditional cases.
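The stat-rule syntax itself is product-specific and isn’t reproduced here. As a generic illustration of the logic a hand-written Stale Data check typically encodes, here is a minimal Python sketch against an in-memory SQLite database. The `loan_rates` table, its `loaded_at` column, and the 24-hour threshold are all hypothetical.

```python
import sqlite3
from datetime import datetime, timedelta, timezone
from typing import Optional

MAX_AGE = timedelta(hours=24)  # hypothetical freshness threshold


def latest_load_time(conn: sqlite3.Connection) -> Optional[datetime]:
    # The core query of the check: when did the newest row land?
    # Assumes loaded_at holds ISO-8601 UTC strings, which sort correctly as text.
    (value,) = conn.execute("SELECT MAX(loaded_at) FROM loan_rates").fetchone()
    return datetime.fromisoformat(value) if value is not None else None


def is_stale(conn: sqlite3.Connection, now: datetime, max_age: timedelta = MAX_AGE) -> bool:
    last = latest_load_time(conn)
    # An empty table is treated as stale: nothing has been loaded at all.
    return last is None or now - last > max_age


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE loan_rates (rate REAL, loaded_at TEXT)")
    now = datetime(2024, 5, 2, 9, 0, tzinfo=timezone.utc)
    loaded = (now - timedelta(hours=30)).isoformat()
    conn.execute("INSERT INTO loan_rates VALUES (?, ?)", (5.25, loaded))
    print(is_stale(conn, now))  # True: the newest row is 30 hours old
```

Treating an empty table as stale is a deliberate choice. A feed that never loaded is exactly the kind of silent failure a freshness check should catch.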

Five tips for maximizing data freshness

How do you get the most out of Collibra and effectively monitor data freshness? Here are a few tips.

- Implement a data quality monitoring framework: Establish a structured approach to evaluating and maintaining data quality. Define clear data freshness metrics and thresholds. Determine which data is considered fresh and which is considered stale.

- Utilize real-time alerts: Leverage Collibra’s real-time alerting capabilities to receive instant notifications when data freshness values fall below acceptable levels — and resolve issues faster.

- Assess relevance and availability requirements for analytics: Evaluate the specific needs of your analytics processes to establish a realistic freshness range for each of your organization’s datasets. This helps ensure that the data used for decision-making is up to date and reliable.

- Continuously review and adjust data freshness policies: Regularly assess your data freshness policies and make adjustments as needed to stay in line with your organization’s evolving needs and industry standards.

- Foster a culture of data quality awareness: Encourage a company-wide understanding of the importance of data freshness and engage stakeholders in maintaining high-quality data.
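Several of these tips can be made concrete with a small, reviewable policy table. Each dataset gets its own thresholds, based on how the analytics that consume it use it, and the table is easy to revisit as needs change. The sketch below assumes two tiers, warning and stale. The `FreshnessPolicy` class, the dataset names, and the threshold values are hypothetical examples, not recommendations or Collibra settings.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class FreshnessPolicy:
    dataset: str
    warn_after: timedelta   # older than this: data is aging, worth a heads-up
    stale_after: timedelta  # older than this: data is stale, alert someone

    def __post_init__(self) -> None:
        if self.warn_after > self.stale_after:
            raise ValueError(f"{self.dataset}: warn_after must not exceed stale_after")


def classify(policy: FreshnessPolicy, last_loaded: datetime, now: datetime) -> str:
    age = now - last_loaded
    if age > policy.stale_after:
        return "stale"
    if age > policy.warn_after:
        return "warning"
    return "fresh"


# Hypothetical thresholds: a rate feed that drives loan pricing is held to a
# much tighter window than a housing dataset used for weekly reporting.
POLICIES = [
    FreshnessPolicy("interest_rates", timedelta(minutes=15), timedelta(hours=1)),
    FreshnessPolicy("housing_data", timedelta(days=1), timedelta(days=7)),
]

if __name__ == "__main__":
    now = datetime(2024, 5, 2, 9, 0)
    last_loaded = {
        "interest_rates": now - timedelta(hours=2),
        "housing_data": now - timedelta(days=3),
    }
    for policy in POLICIES:
        print(policy.dataset, classify(policy, last_loaded[policy.dataset], now))
    # interest_rates stale
    # housing_data warning
```

Keeping thresholds in data rather than scattered through code makes the “continuously review and adjust” step a matter of editing one table.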

By following these best practices, you can keep your data fresh, accurate, and valuable, providing a solid foundation for informed business decisions and your organization’s success.

Prevent stale data disasters with Collibra

Monitoring data freshness is essential for effective data observability, and it plays a crucial role in ensuring the accuracy and reliability of business decisions.

The truth is that the risks associated with stale data not only impact the bottom line but can also significantly damage your company’s reputation — and embarrass the data professionals responsible for stewarding organizational data.

Collibra offers a comprehensive solution for managing data quality and observability, providing complete visibility into data pipelines and empowering businesses to tackle data freshness challenges head-on.

With Collibra, enterprises can proactively monitor and maintain data, ensuring it remains accurate and relevant.

Discover how Collibra Data Quality & Observability can transform your data management strategy, and take the first step toward harnessing the power of data freshness by starting a free trial today.